Generative Dictionaries:

WordHack Open Projector Talk

The following is a textual reproduction of an open-mic lightning talk I gave about different generative dictionary projects and techniques, along with the slides I used for it. It was based on a list of such projects I compiled here.

The original talk was given on November 19, 2020 at WordHack, via Babycastles.

A video recording is available on YouTube (mirror).

– Robin Hill

Hello everyone! My name is Robin Hill.

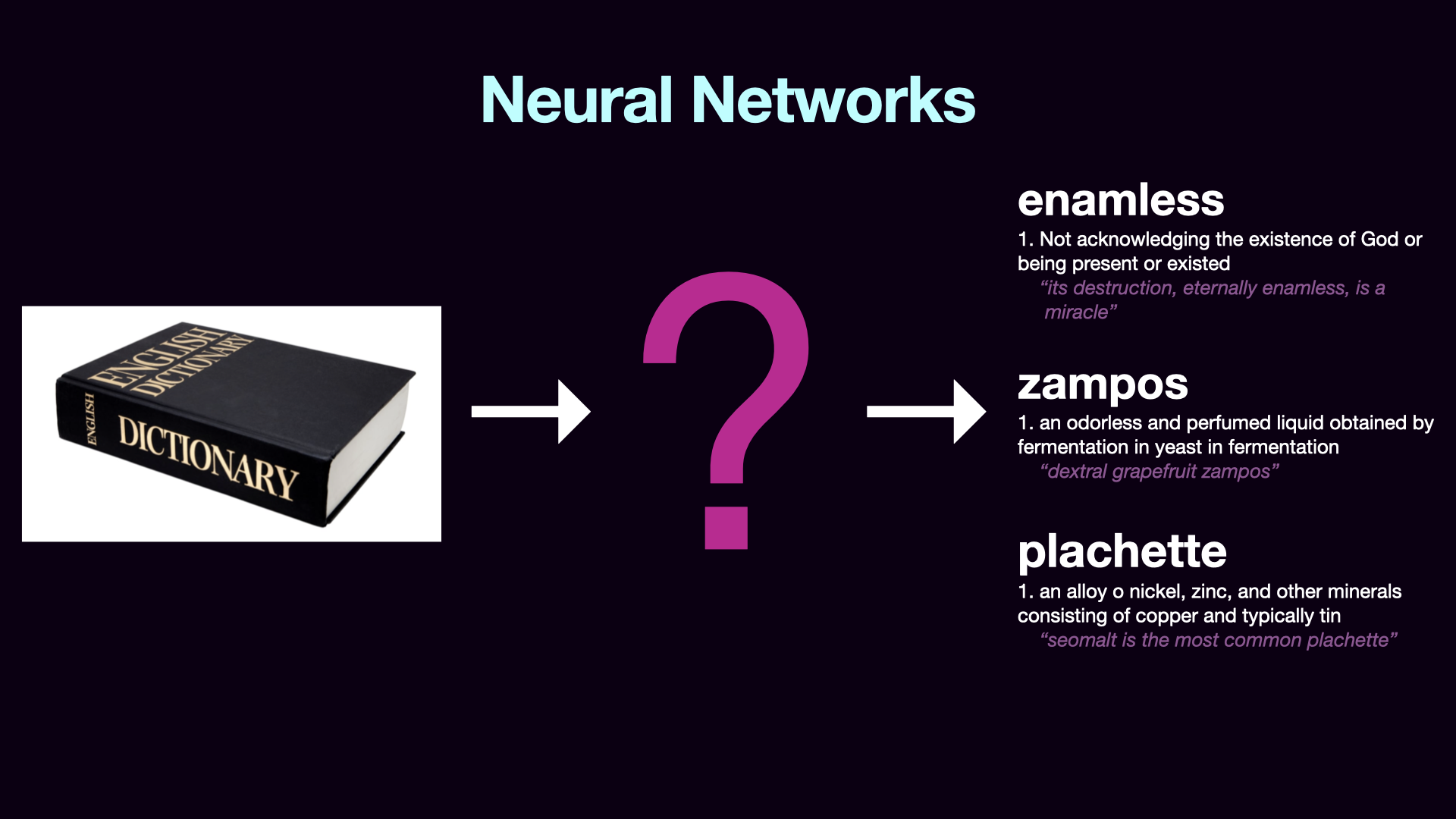

The most common approach in the projects I’ve seen is using neural networks.

So, taking a closer look at one of these, we can see that they can produce some good-looking results, including extra flourishes like pronunciations and example sentences. But sometimes the definitions are a little garbled, since there's no internal structure of meaning behind them. They're fundamentally imitative, stringing letters and words together to make things that resemble whatever you fed into them.
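
The projects described here use neural networks, but the same imitative principle shows up in a much simpler model. This sketch swaps in a character-level Markov chain, which is not a neural network and is not taken from any project in the talk. It records which letter follows each two-letter context in a training list, then samples new words one letter at a time. The word list is a hypothetical toy example.

```python
import random
from collections import defaultdict

ORDER = 2  # how many previous characters the model looks at

def train(words, order=ORDER):
    """Record every character that follows each `order`-character context.

    Duplicates are kept, so frequent continuations are sampled more often.
    """
    model = defaultdict(list)
    for word in words:
        padded = "^" * order + word + "$"  # "^" pads the start, "$" marks the end
        for i in range(len(padded) - order):
            model[padded[i:i + order]].append(padded[i + order])
    return model

def generate(model, rng, order=ORDER, max_len=20):
    """Sample a new word one character at a time from the recorded followers."""
    context = "^" * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])
        if nxt == "$":  # reached an end-of-word marker
            break
        out.append(nxt)
        context = context[1:] + nxt  # slide the context window forward
    return "".join(out)

# Hypothetical toy training list, not data from any project in the talk.
WORDS = ["transfer", "transit", "transport", "project", "reject", "inject"]
model = train(WORDS)
rng = random.Random(0)
print([generate(model, rng) for _ in range(5)])
```

Every three-character run in the output also occurs somewhere in the training words. That is why results look plausible locally but carry no meaning of their own.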

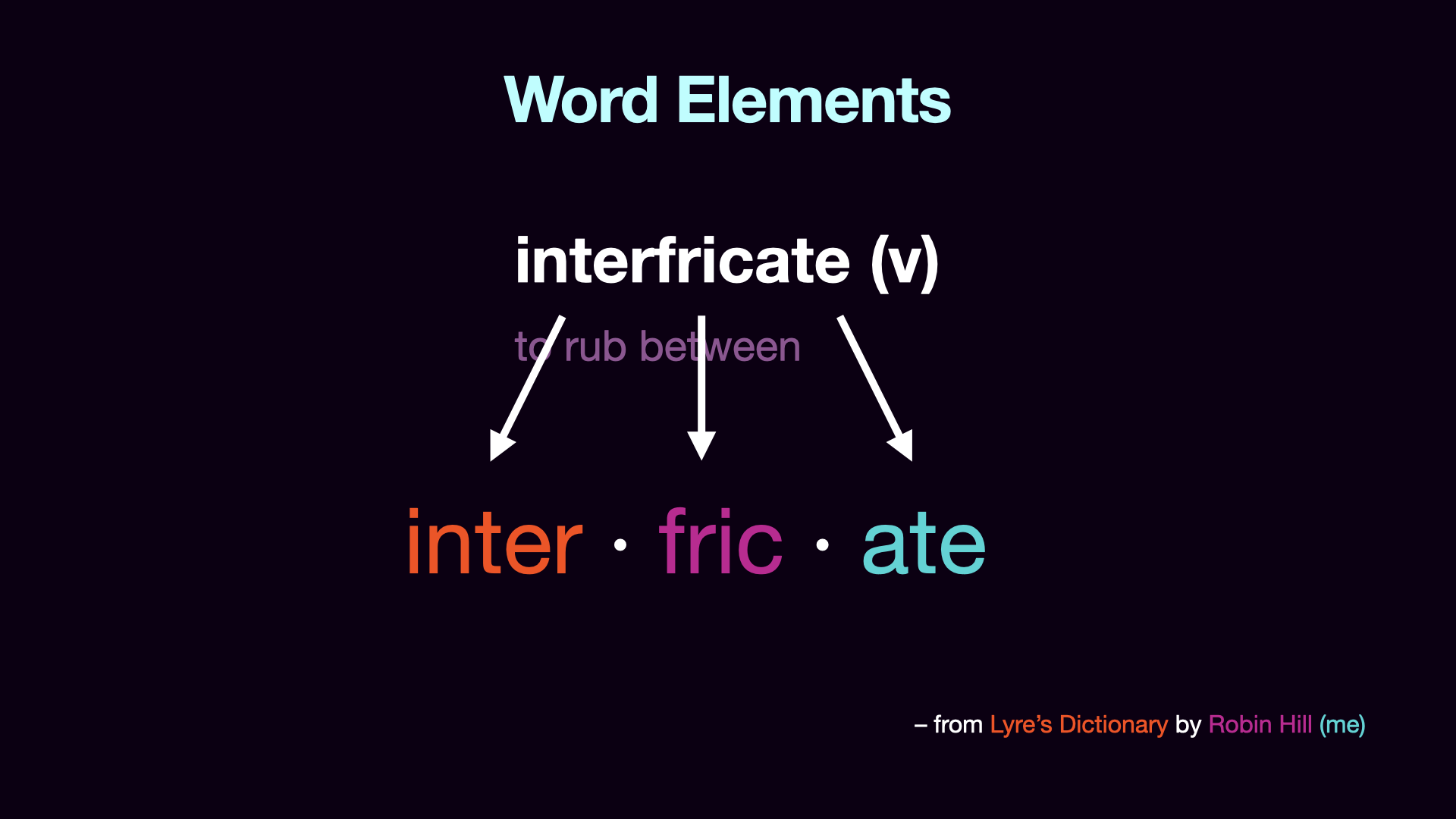

So a different approach is to build words and definitions up out of smaller discrete elements.
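
Here is a minimal sketch of this idea. It assumes hypothetical tables of Latin prefixes and roots with their usual glosses, which are not taken from any of the projects mentioned. A word and a literal definition are both built from the same randomly chosen parts:

```python
import random

# Hypothetical morpheme tables as (form, gloss) pairs; the glosses are the
# usual Latin senses, not lists used by any project in the talk.
PREFIXES = [("trans", "across"), ("sub", "under"), ("re", "back"), ("pre", "before")]
ROOTS = [("ject", "throw"), ("port", "carry"), ("scribe", "write"), ("duce", "lead")]

def make_entry(rng):
    """Build one word and its literal definition from a prefix and a root."""
    prefix, p_gloss = rng.choice(PREFIXES)
    root, r_gloss = rng.choice(ROOTS)
    return prefix + root, f"to {r_gloss} {p_gloss}"

rng = random.Random(42)
for _ in range(3):
    word, definition = make_entry(rng)
    print(f"{word}: {definition}")
```

The definition is assembled from the glosses of the parts, so it always matches the word's structure, unlike the imitative output of a neural model. Some combinations (transport, subscribe, reduce) are real words. A generator aiming only for invented words would also need to filter against a word list.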

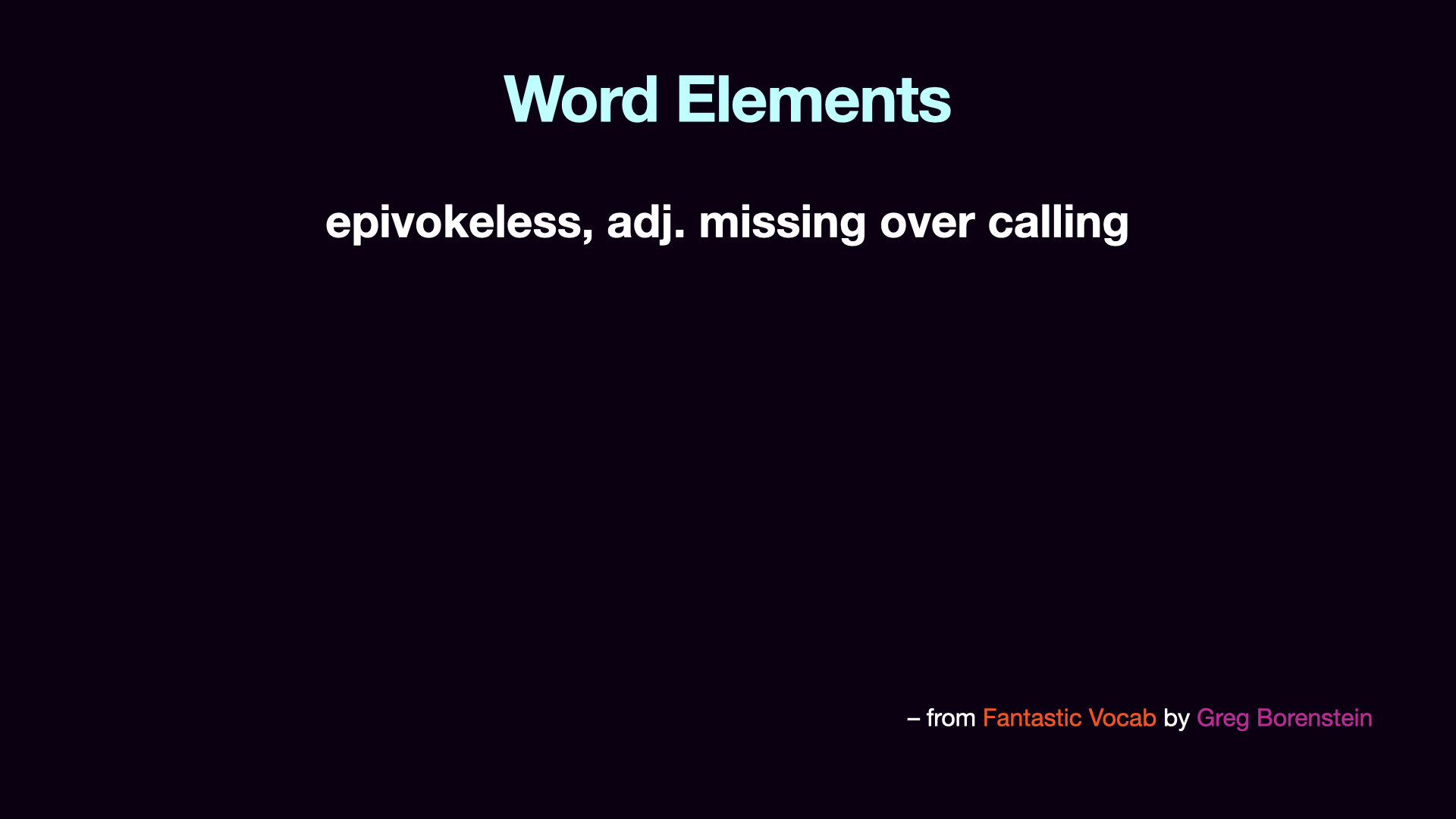

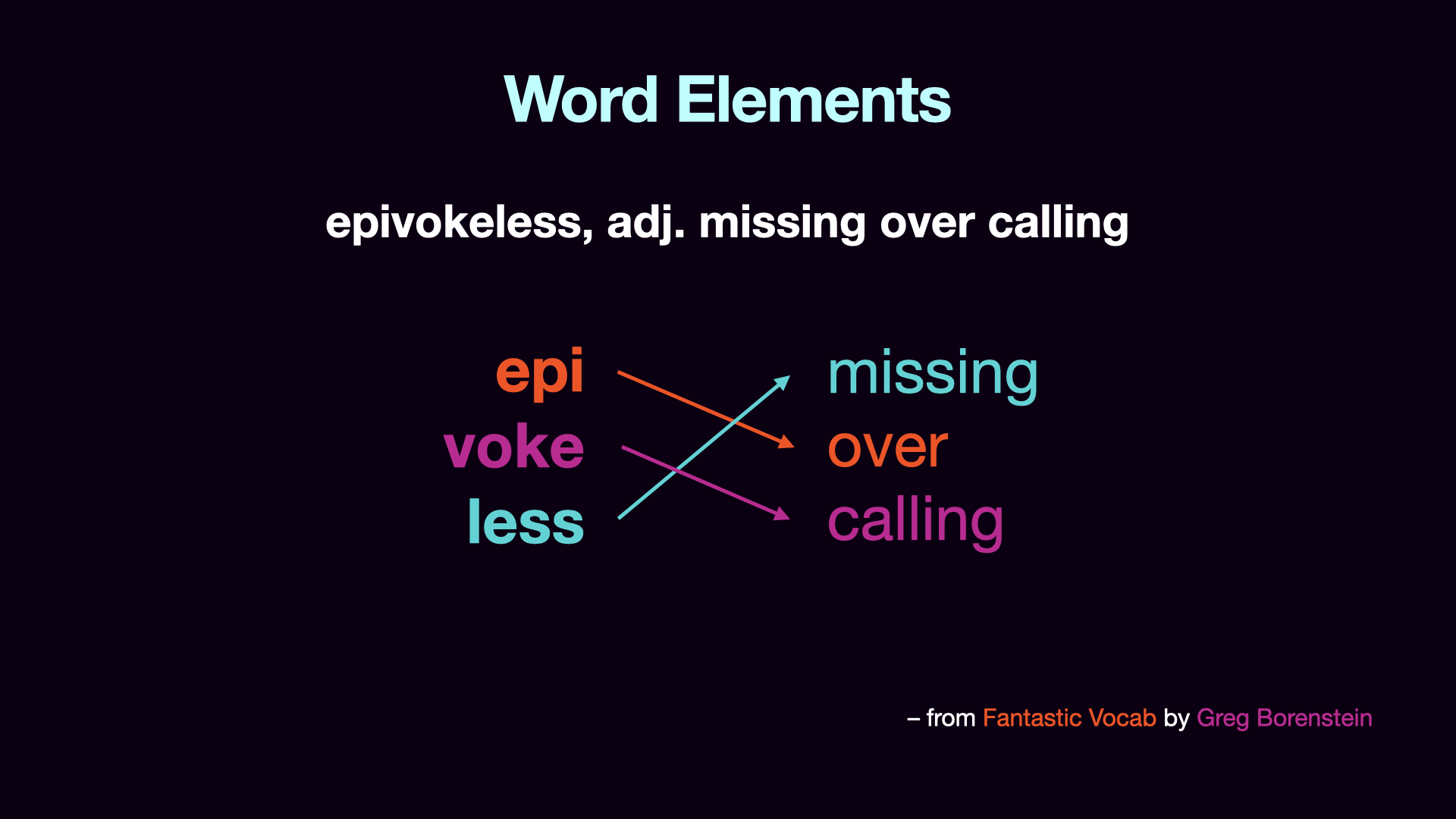

Let’s look at another example of this type: Fantastic Vocab by Greg Borenstein.

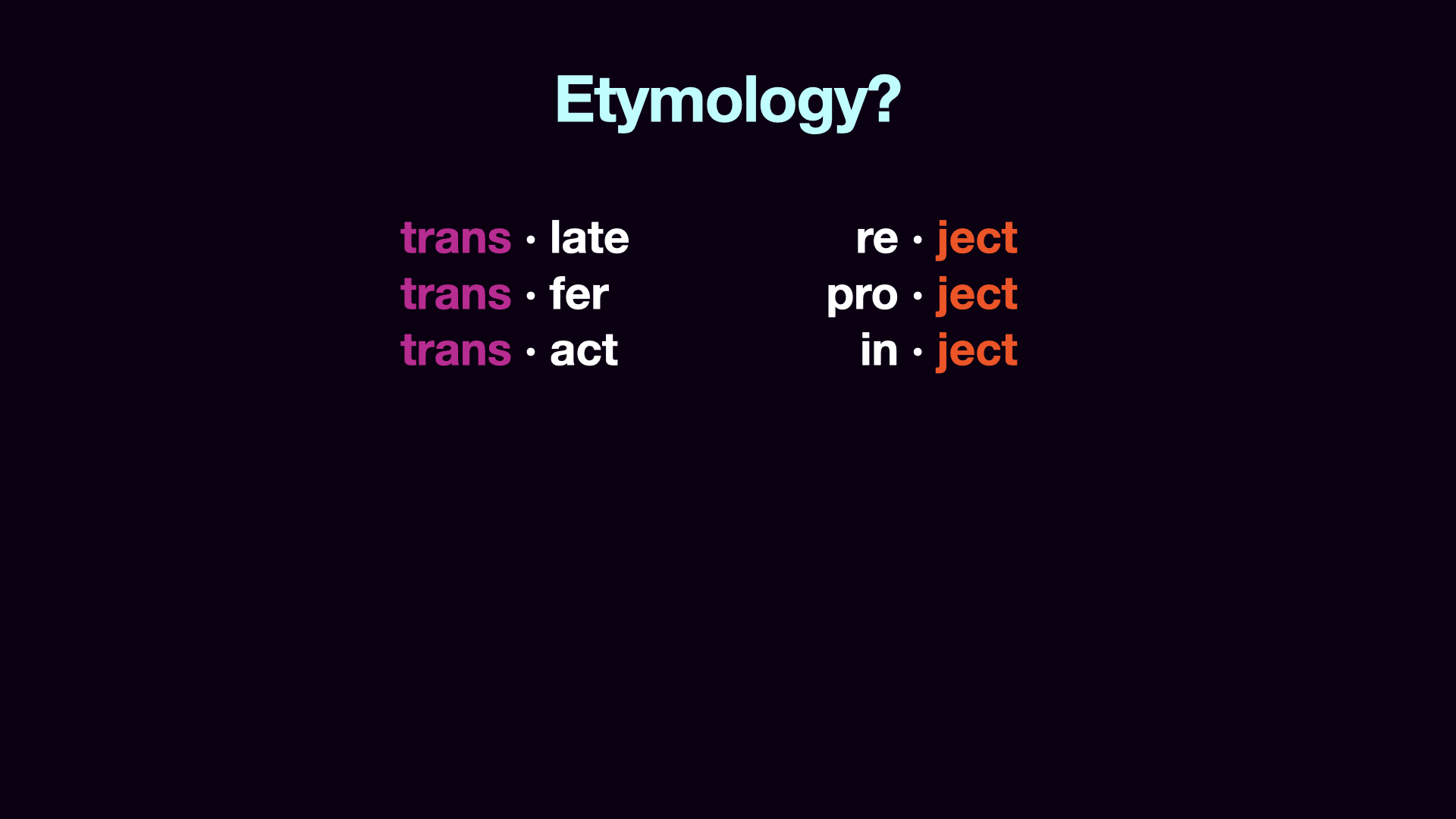

One advantage of working with etymological units is that they are often recognizable. So here we have a few words that all have the “trans-” prefix, meaning “across”, and some with the “ject” root, which comes from a Latin word meaning “to throw”.
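
Recognizability also works in reverse: a word can be split back into known units and glossed literally. This is a minimal sketch that assumes small hypothetical prefix and root tables. It is not taken from any of the projects discussed.

```python
# Hypothetical tables mapping each unit to its usual Latin sense.
PREFIXES = {"trans": "across", "pro": "forward", "re": "back", "in": "into"}
ROOTS = {"ject": "throw", "port": "carry", "mit": "send"}

def gloss(word):
    """Split `word` into a known prefix plus a known root and gloss it literally.

    Returns None when the word does not divide into exactly those two units.
    """
    for prefix, p_gloss in PREFIXES.items():
        if not word.startswith(prefix):
            continue
        root = word[len(prefix):]
        if root in ROOTS:
            return f"{prefix}- + -{root}: to {ROOTS[root]} {p_gloss}"
    return None

for w in ["transject", "project", "reject", "transport", "dog"]:
    print(w, "->", gloss(w))
```

For a real word, the literal gloss gives only its etymological sense; “reject” comes out as “to throw back”. That same transparency is what makes an invented combination like “transject” feel interpretable.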

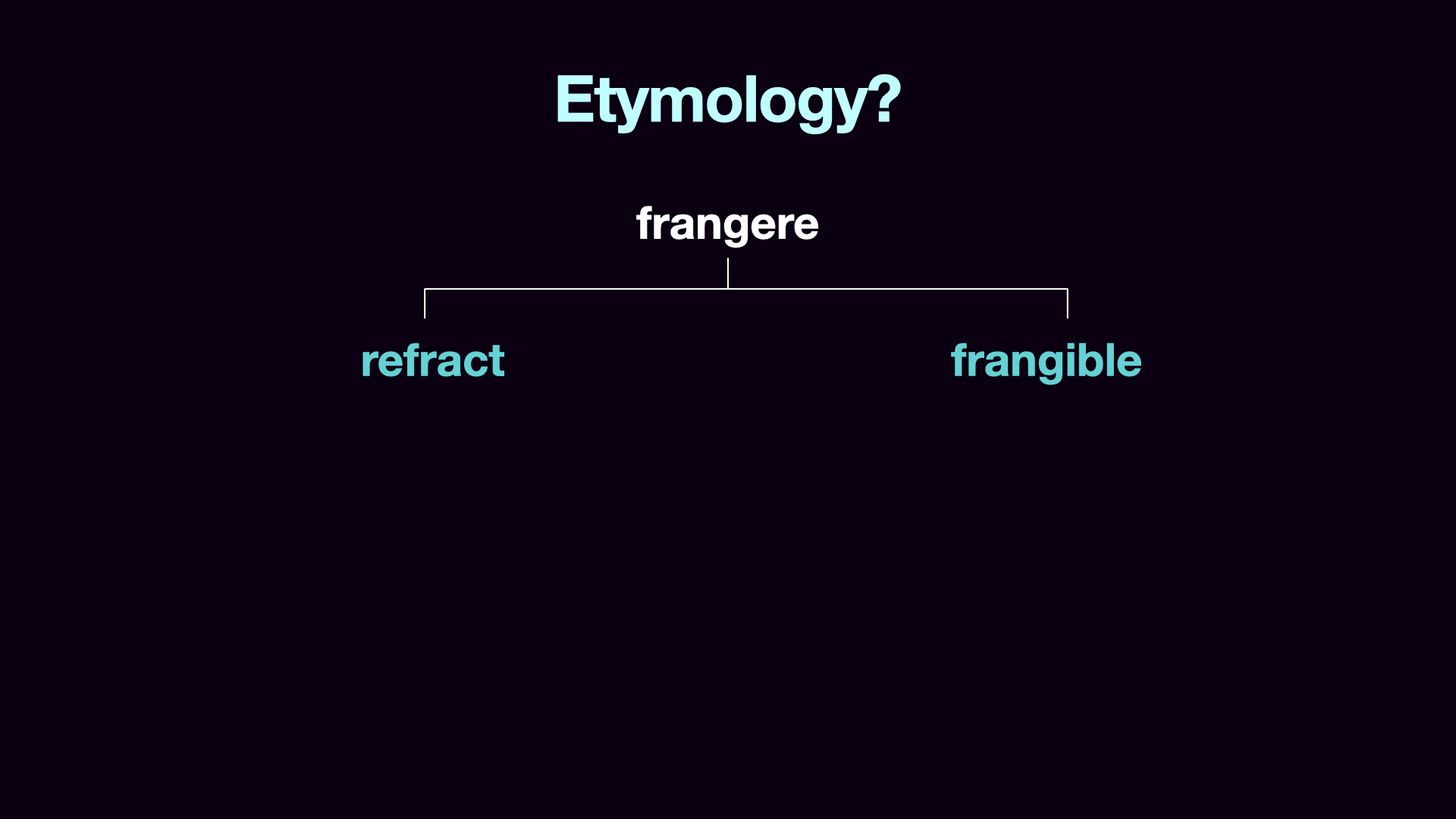

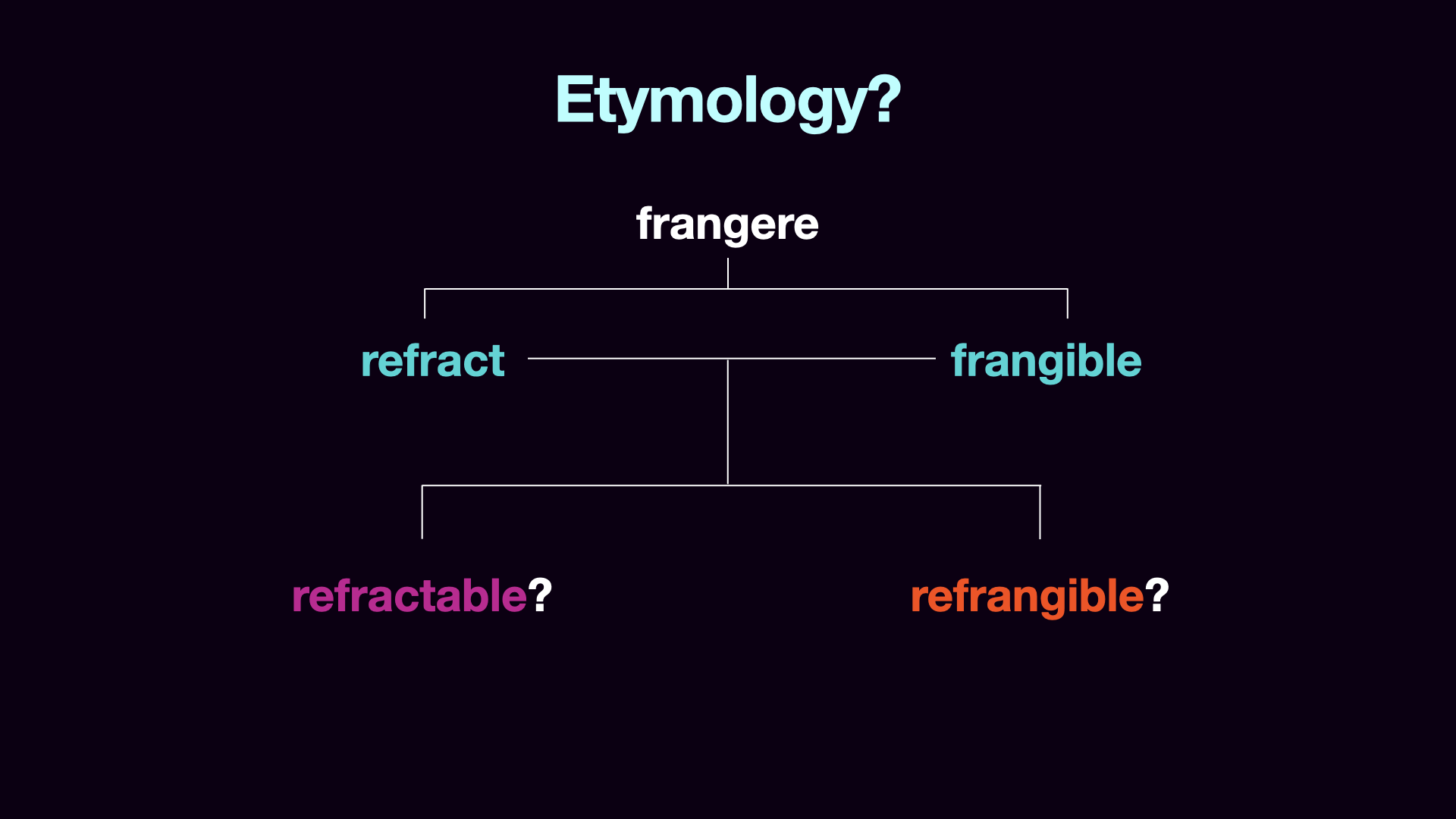

Now let's look at a different example.

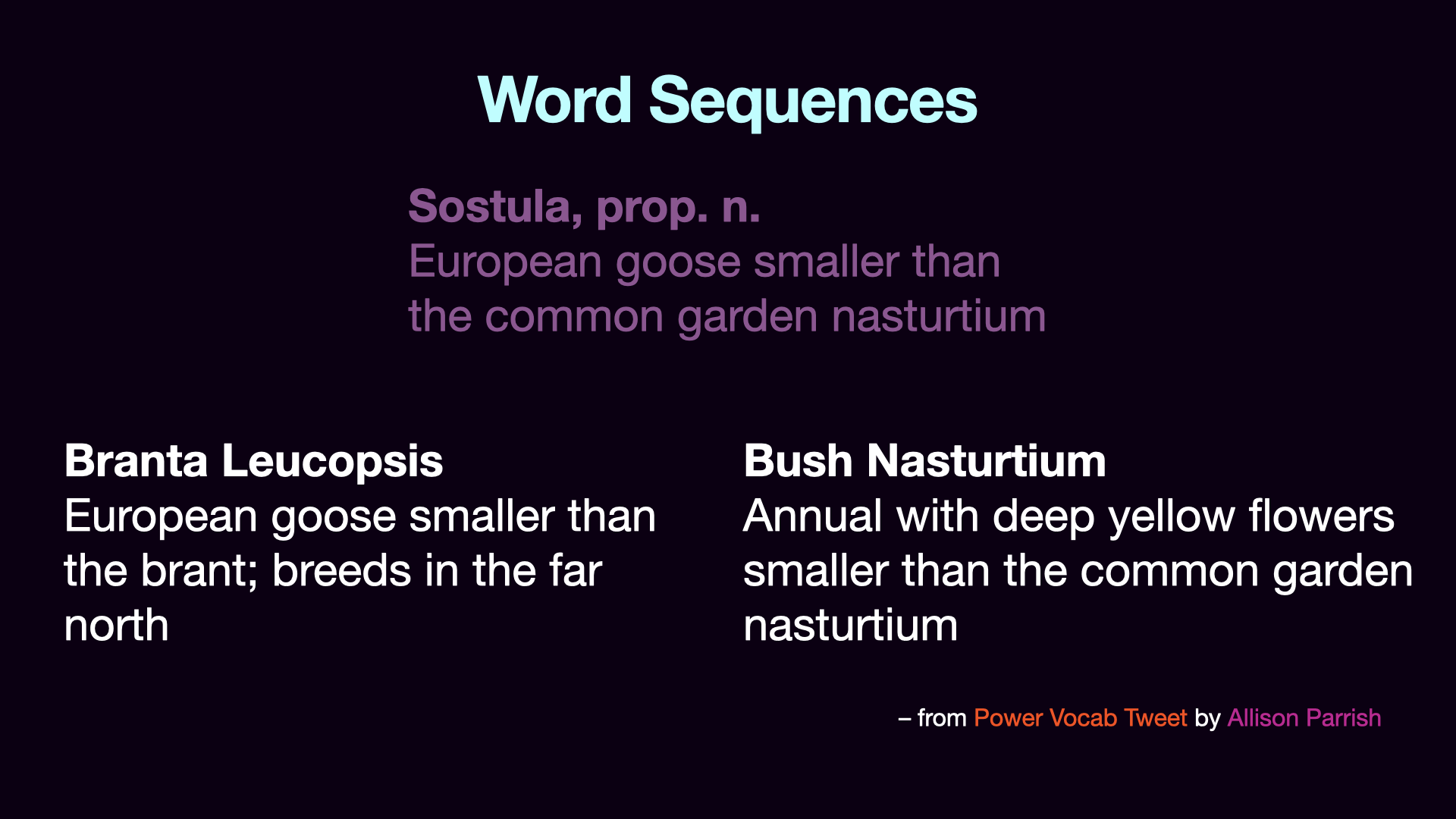

Let’s look at one more technique. This example comes from Power Vocab Tweet by Allison Parrish.

Doing some searching, I think I found the real dictionary entries that were combined to make this example (or at least plausible candidates).

So if we look at the two phrases being combined, each one is a meaningful but not quite complete idea. We have the idea of “a European goose that’s smaller than something” and “something that is smaller than the common garden nasturtium.” That “smaller than” comparison is the point where they’re joined into a new, whole idea.
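
The joining step can be sketched as a splice at a phrase both definitions share. This is an assumption about how such a combination could be produced, not Allison Parrish's actual implementation. The two input definitions are also invented stand-ins for the real entries.

```python
def splice(def_a, def_b, pivot):
    """Join the head of def_a to the tail of def_b at a phrase both contain.

    Keeps def_a up to and including `pivot`, then continues with whatever
    follows `pivot` in def_b. Returns None if either definition lacks it.
    """
    i = def_a.find(pivot)
    j = def_b.find(pivot)
    if i == -1 or j == -1:
        return None
    return def_a[: i + len(pivot)] + def_b[j + len(pivot):]

# Invented stand-ins for the two entries, NOT the real dictionary text.
a = "a European goose that is smaller than a related species"
b = "a plant that is smaller than the common garden nasturtium"
print(splice(a, b, "smaller than"))
```

A fuller version would search a whole dictionary for pairs of definitions that share a pivot phrase such as “smaller than”, then splice each pair.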

So that’s about all I have time for here. You can read the full list on my web site, at this link.

❖